Promptly Evaluating Prompts

Bayesian Thunderdome

Generative Models

Typeface-off

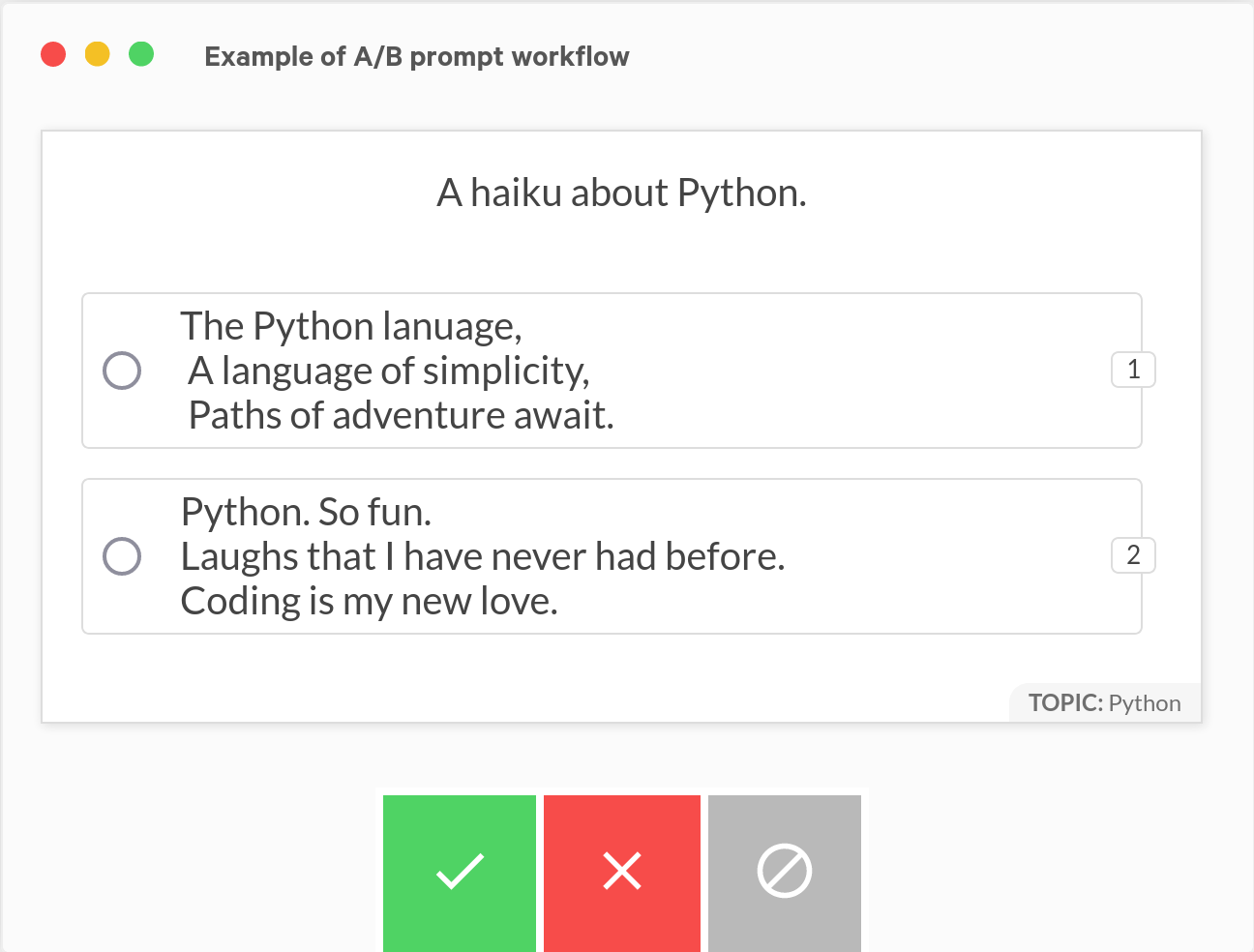

- Prompts hard to objectively evaluate

- LLM Generation

- Stable Diffusion

- Easier to choose A/B

Problem

A fistful of decisions

- Many Choices

- Paired Comparisons

- Noisy Outcomes

- Transitivity

- Add new options at any time

Solution

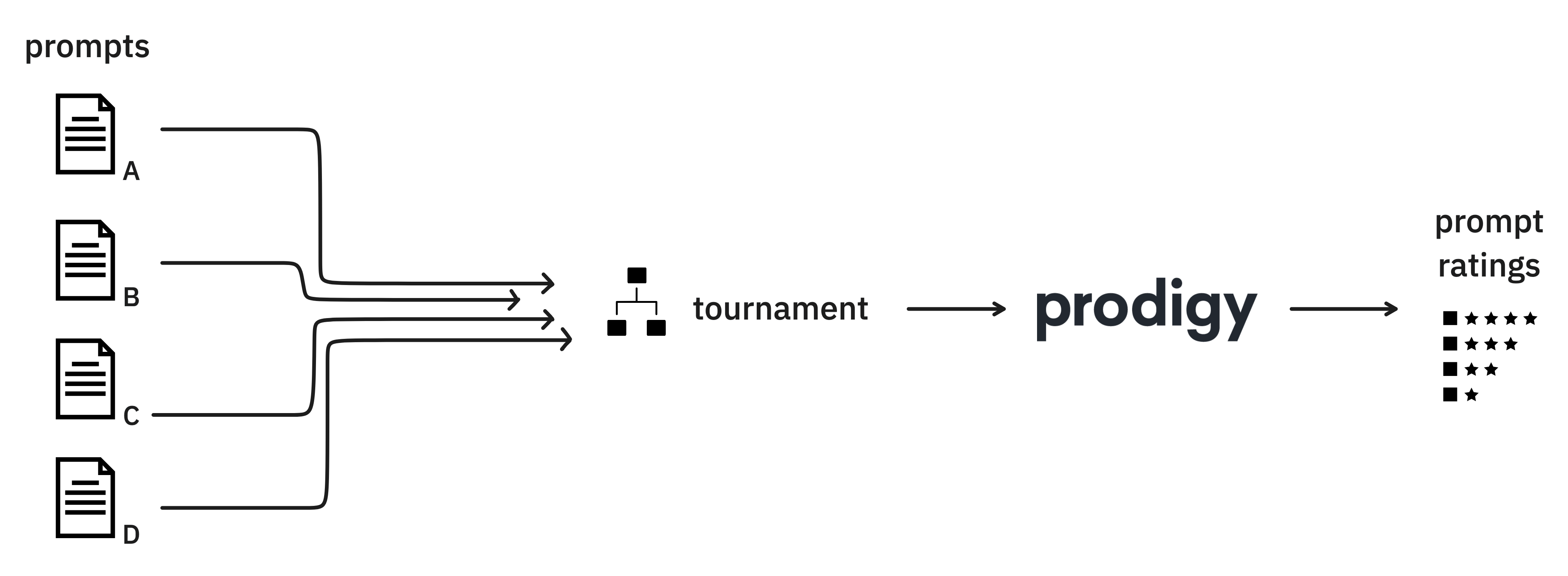

N prompts enter,

1 prompt leaves

- GlickBest Algorithm ⭐

- Glicko Ratings

- Exploration Maxx'ing

Glicko

Glickman & Round-robin

- Rating $(1500)$

- Ratings Deviation $(1500 ± 50)$

- Closed-form Update

Bradley-Terry Model

\[ \text{odds} = 10^{\frac{r_j - r_i}{400}} \]

Who Wins?

| $Δ = r_j - r_i$ | Odds ($j$ : $i$) | Win % ($j$) |
|---|---|---|
| +100 | 9:5 | 64% |
| +200 | 16:5 | 76% |
| +400 | 10:1 | 91% |
| +800 | 100:1 | 99% |
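The table above follows directly from the odds formula. As a minimal sketch (the function names `odds` and `win_probability` are illustrative and are not taken from the GlickBest repository), the conversion from a rating gap to odds and a win percentage could look like this:

```python
def odds(delta: float) -> float:
    """Odds that option j beats option i, where delta = r_j - r_i."""
    return 10 ** (delta / 400)


def win_probability(delta: float) -> float:
    """Convert odds of o:1 into a probability o / (1 + o)."""
    o = odds(delta)
    return o / (1 + o)


# Reproduce the rows of the table for the same rating gaps.
for delta in (100, 200, 400, 800):
    print(f"{delta:+5d}  odds {odds(delta):6.2f}:1  win {win_probability(delta):.0%}")
```

The 9:5 and 16:5 entries in the table are rounded forms of roughly 1.78:1 and 3.16:1.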

➡️ update

ratings $±$ uncertainty
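To make the closed-form update concrete, here is a sketch of the original Glicko (Glicko-1) update as published by Glickman. It assumes the talk refers to those formulas; GlickBest itself may differ in details, and the growth of the ratings deviation between rating periods is left out. The names `g`, `expected_score`, and `glicko_update` are chosen for illustration.

```python
import math

Q = math.log(10) / 400  # Glicko scaling constant


def g(rd: float) -> float:
    """Discount factor: opponents with uncertain ratings count for less."""
    return 1 / math.sqrt(1 + 3 * Q**2 * rd**2 / math.pi**2)


def expected_score(r: float, r_opp: float, rd_opp: float) -> float:
    """Expected score of an option rated r against one opponent."""
    return 1 / (1 + 10 ** (-g(rd_opp) * (r - r_opp) / 400))


def glicko_update(r, rd, results):
    """Return the new (rating, RD) after a batch of comparisons.

    results: list of (opponent_rating, opponent_rd, score),
    where score is 1 for a win, 0 for a loss, 0.5 for a draw.
    """
    info = 0.0   # accumulates 1 / d^2, the information in the outcomes
    drift = 0.0  # accumulates how surprising the outcomes were
    for r_opp, rd_opp, score in results:
        e = expected_score(r, r_opp, rd_opp)
        info += Q**2 * g(rd_opp) ** 2 * e * (1 - e)
        drift += g(rd_opp) * (score - e)
    precision = 1 / rd**2 + info
    return r + Q / precision * drift, math.sqrt(1 / precision)


# Worked example from Glickman's Glicko description:
# a 1500 ± 200 player beats 1400 ± 30, then loses to 1550 ± 100 and 1700 ± 300.
new_r, new_rd = glicko_update(1500, 200, [(1400, 30, 1), (1550, 100, 0), (1700, 300, 0)])
print(round(new_r), round(new_rd, 1))
```

On that worked example the update moves the rating to about 1464 and shrinks the deviation to about 151.4: every comparison, won or lost, reduces the uncertainty.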

Pure Exploration

BDSM

Bayesian Decisions using Sequential Matches

Key Idea

Play the $i$ most likely to be $1^{\text{st}}$,

against $j$ that is $2^{\text{nd}}$ most likely to be $1^{\text{st}}$

This is subtle!

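The runner-up is not simply the option with the second-highest rating: an option with a lower rating but a larger ratings deviation can be more likely to be $1^{\text{st}}$.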
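The selection rule can be sketched with a small Monte Carlo estimate. This is an illustration only: it assumes each option's true rating is independently normal with mean equal to its rating and standard deviation equal to its ratings deviation, and the names `first_place_probabilities` and `pick_match` are hypothetical, not the GlickBest API.

```python
import random


def first_place_probabilities(ratings, n_samples=20_000, seed=0):
    """Estimate, for each (rating, RD) pair, the probability of being the best."""
    rng = random.Random(seed)
    wins = [0] * len(ratings)
    for _ in range(n_samples):
        # One plausible "true" rating per option.
        draws = [rng.gauss(mu, rd) for mu, rd in ratings]
        wins[draws.index(max(draws))] += 1
    return [w / n_samples for w in wins]


def pick_match(ratings, **kwargs):
    """Return (i, j): the most likely winner and the second most likely winner."""
    probs = first_place_probabilities(ratings, **kwargs)
    order = sorted(range(len(ratings)), key=lambda k: probs[k], reverse=True)
    return order[0], order[1]


options = [
    (1600, 30),   # 0: highest rating, well measured
    (1550, 20),   # 1: second-highest rating, well measured
    (1500, 200),  # 2: lowest rating, but very uncertain
]
print(pick_match(options))
```

With these numbers the match is option 0 against option 2, not option 1: the uncertain newcomer has a far better chance of turning out to be the best than the well-measured second place.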

Head to Head

Other Benefits

- Late entrants (useful for iterative development)

- Ratings for every option

- Ranking probabilities (keep going until 95% confident)
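One way to turn the 95% rule into a stopping check is sketched below. It is a simplification under stated assumptions: it only compares the current leader with a single rival, and it treats both ratings as independent normal distributions. The function names are hypothetical.

```python
import math


def prob_outranks(leader, rival):
    """P(leader's true rating > rival's), each given as (rating, RD) with RD > 0."""
    (r1, rd1), (r2, rd2) = leader, rival
    # The difference of two independent normals is normal with combined variance.
    z = (r1 - r2) / math.hypot(rd1, rd2)
    return 0.5 * (1 + math.erf(z / math.sqrt(2)))


def confident_enough(leader, rival, threshold=0.95):
    """True once the leader outranks the rival with the required probability."""
    return prob_outranks(leader, rival) >= threshold


print(confident_enough((1600, 30), (1550, 20)))  # a 50-point lead
print(confident_enough((1700, 30), (1550, 20)))  # a 150-point lead
```

With these deviations a 50-point lead falls short of the threshold, while a 150-point lead clears it.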

Variants

- Thompson Sampling (easy)

- Many-way Comparisons

- Maximize total ranking information (easy?)

- Load spreading
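The Thompson Sampling variant might look like the sketch below. This is one interpretation, not the talk's definition: instead of estimating first-place probabilities, draw a single plausible rating for every option and match the two highest draws. The normal sampling model and the name `thompson_pair` are assumptions.

```python
import random


def thompson_pair(ratings, rng=random):
    """Sample one rating per (rating, RD) pair and return the indices of the top two draws."""
    draws = [rng.gauss(mu, rd) for mu, rd in ratings]
    order = sorted(range(len(ratings)), key=lambda k: draws[k], reverse=True)
    return order[0], order[1]


rng = random.Random(42)
options = [(1600, 30), (1550, 20), (1500, 200)]
print([thompson_pair(options, rng) for _ in range(5)])
```

Because the pairing is random, uncertain options get picked in proportion to how plausible it is that they are near the top, which spreads comparisons more widely than always playing the same two favourites.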

Stuff

- GlickBest is "sticky"

- No look-ahead, hurts with few comparisons

Get GlickBest

GitHub repo

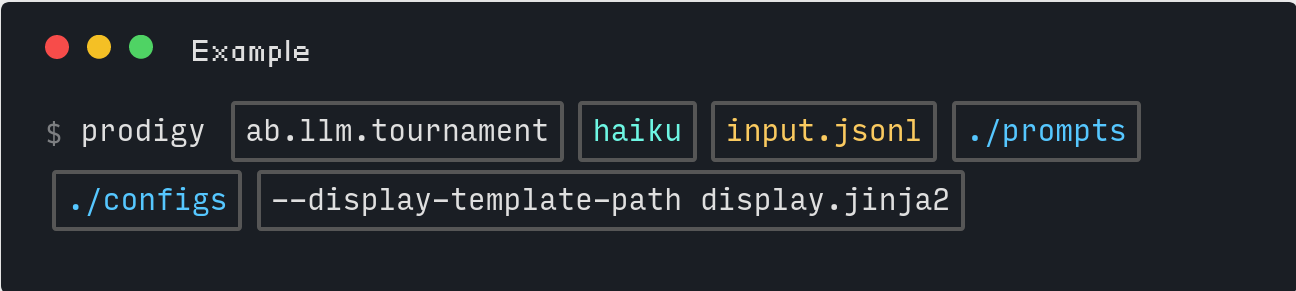

(Minimal reference implementation)

github.com/andykitchen/glickbest

Now in Prodigy

prodi.gy/features/prompt-engineering

Questions Please

Thank you